A framework for designing intelligent agents

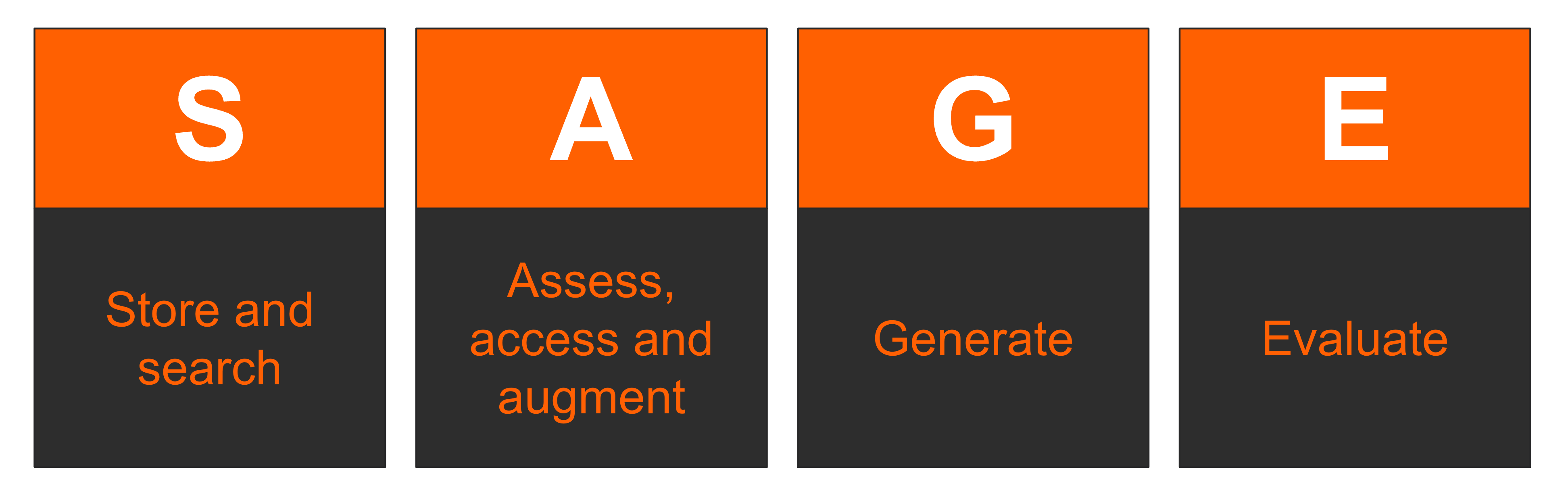

SAGE

LLMs, RAG and beyond

ChatGPT, Claude and Gemini are powerful applications, and they are becoming more capable every month.

Whilst they are powerful and general, they aren’t always immediately applicable to enterprise solutions. They first need strategies for integrating data, aligning language and behaviours, and ensuring they’re reliable and performant for specific work-based tasks. This means LLMs such as GPT-4, Llama 3 and Mistral need to be extended.

Retrieval Augmented Generation (RAG) has become a standard pattern for connecting internal and proprietary data sources with powerful language models to create intelligent agents.

RAG is often discussed as a singular concept. In truth, many decisions must be made when considering the requirements of the intelligent agent. RAG also doesn’t do everything you need it to. Sometimes, you need to consider using multiple models and agents, fine-tuning for specific tasks, or complex pre- and post-processing. At Brightbeam, our SAGE framework helps us consider all of the key elements for ensuring that intelligent agents are fit for purpose for the task at hand.

This post is an initial overview of the framework to think through these decisions.

The right solution for the right problem

The first thing to consider is what the intelligent agent is supposed to do. We are already used to seeing very powerful, general-purpose chatbots like ChatGPT. You can ask them almost anything, and they will do a decent job. But in Enterprise workflows, you don’t necessarily need a general-purpose tool; you more likely need specific and specialised knowledge.

For instance, if you have something that can scan paperwork, extract the right data, store that within Enterprise systems, take action from what is stored and kick off workflows with human colleagues, this has a different set of requirements than an email writer or a customer chatbot. It’s always essential to look at how you believe an intelligent agent will be used by people (if at all) and what the right interface (chat or otherwise) is.

Considerations for design

SAGE examines several aspects of the architecture and design of AI-based agents. We will explore each of these.

Store and search

Store and search are linked concepts. How we store something – the strategy we decide on – and what information we need to complete specific tasks will impact our search and retrieval strategies.

Some decisions we will need to make:

- What information will drive our agent? Do we need to ingest a lot of information before we deploy it so that it can do the job?

- Is there information we need to store and have access to that is more dynamic? For example, from databases or up-to-date news.

- When we ingest new data, do we need to enrich it and store it in ways that add meaning later on?

We must consider how we connect unstructured knowledge from wikis, documents, email or other written sources with structured data such as CRM or operational data sources. The way we store data, for example in a vector database, will affect how easy it is to find and retrieve the right information when interacting with our agent. There are loads of technical choices, and the decisions will likely be driven by existing infrastructure (AWS, Azure, GCP, and others) and databases. There are now many new vector databases designed specifically to support LLMs and intelligent search.

Search encompasses everything we need to identify, find and retrieve for our intelligent agent to do its job effectively. This could come from internal systems or external data sources: something held within the intelligent agent or something entirely outside it. Search is all about balancing speed and correctness for the task at hand. It might also be part of a process that prompts the intelligent agent to ask more questions until it has all the necessary information to do its job effectively.
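To make the link between store and search concrete, here is a minimal sketch in Python. It is an illustration under loud assumptions: `embed` is a toy bag-of-words stand-in for a real embedding model, and `ToyStore` is a hypothetical in-memory class standing in for a vector database, not any product's API.

```python
import math
from collections import Counter


def embed(text: str) -> Counter:
    """Toy stand-in for an embedding model: a bag-of-words count vector."""
    return Counter(text.lower().split())


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[t] * b[t] for t in a if t in b)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


class ToyStore:
    """Hypothetical in-memory store: vectors are computed once, at ingest time."""

    def __init__(self):
        self.items = []  # list of (doc_id, text, vector)

    def add(self, doc_id: str, text: str) -> None:
        self.items.append((doc_id, text, embed(text)))

    def search(self, query: str, k: int = 2):
        """Return the k most similar items as (score, doc_id, text) tuples."""
        q = embed(query)
        scored = [(cosine(q, vec), doc_id, text) for doc_id, text, vec in self.items]
        scored.sort(key=lambda item: item[0], reverse=True)
        return scored[:k]


store = ToyStore()
store.add("hr-1", "annual leave policy for employees")
store.add("it-1", "how to reset your laptop password")
store.add("fin-1", "expense claims and travel policy")

results = store.search("reset password", k=1)
print(results[0][1])  # id of the best match
```

The point of the sketch is that whatever we compute and keep at ingest time (here, the vector) determines what search can do later, which is why the two decisions are best made together.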

Assess, access and augment

Assess examines how we should treat search results. We need to decide if we think the information we have is trustworthy, accurate, and reliable enough to execute a task or generate an answer.

Often, search is about figuring out the right haystacks to dig in to find the perfect needle. Assess takes the needle-finding one step further and determines whether it’s the right needle or whether the agent needs more information.
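One way to picture the assess step is as a small gate between search and generation. The sketch below is an assumption-heavy illustration: the `Hit` type, the `min_score` threshold and the "at least two independent sources" rule are all hypothetical choices, not part of SAGE itself.

```python
from dataclasses import dataclass


@dataclass
class Hit:
    source: str   # where the passage came from (hypothetical field)
    text: str
    score: float  # retrieval similarity, assumed to be in [0, 1]


def assess(hits, min_score=0.5, min_sources=2):
    """Decide whether the search results are good enough to act on.

    Returns ("proceed", trusted_hits) when enough distinct sources clear
    the score threshold, otherwise ("need_more", trusted_hits).
    """
    trusted = [h for h in hits if h.score >= min_score]
    distinct_sources = {h.source for h in trusted}
    decision = "proceed" if len(distinct_sources) >= min_sources else "need_more"
    return decision, trusted


hits = [
    Hit("wiki", "Refunds take five working days.", 0.82),
    Hit("crm", "Refund issued within five days.", 0.74),
    Hit("forum", "I think refunds are instant?", 0.31),
]
decision, trusted = assess(hits)
print(decision, len(trusted))
```

When the decision is `need_more`, the agent could widen the search or ask the user a clarifying question rather than generating an answer from weak evidence.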

In many companies, certain pieces of information aren’t for public consumption or use. We want agents that are capable of using as much information as possible, but we must also ensure that the user of the agent has the appropriate credentials and access. Access can be determined at all points when interacting with an intelligent agent. Access controls can be built in at design time – i.e. don’t give an agent information it shouldn’t be using – or at runtime – i.e. does the person I’m currently interacting with have the appropriate permissions to know what I’ve found?
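A runtime access check can be sketched as a filter applied to whatever search has found, before any of it reaches the model. This is a minimal illustration that assumes a simple group-based permission model; the `Document` type, its `allowed_groups` field and the group names are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Document:
    doc_id: str
    text: str
    allowed_groups: frozenset  # groups permitted to see this document


def filter_by_access(docs, user_groups):
    """Keep only documents that share at least one group with the user."""
    groups = frozenset(user_groups)
    return [d for d in docs if d.allowed_groups & groups]


found = [
    Document("handbook", "Office opening hours", frozenset({"all-staff"})),
    Document("payroll", "Salary bands by grade", frozenset({"hr"})),
]

# The current user is in engineering, so the payroll document is withheld.
visible = filter_by_access(found, {"all-staff", "engineering"})
print([d.doc_id for d in visible])
```

Filtering before generation, rather than asking the model to keep secrets, means restricted text never enters the context in the first place.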

Augmented generation is about providing the right context for the intelligent agent to do the job. In practice, you don’t want to give too much information, as this can result in hallucinations, confabulations and misinformation. Too little, and it can’t do the job correctly. There are also cost and speed implications of providing too much information. Context windows can now support around 2M tokens (roughly 1.4M words), which gives LLMs access to a lot of information, but that doesn’t come for free and takes time to process in every transaction.
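The trade-off between too much and too little context can be sketched as packing the best passages into a fixed token budget. The sketch below makes two assumptions: token counts are estimated from word counts using the rough 10 tokens per 7 words ratio implied by the figures above (a real system would use the model's own tokenizer), and passages arrive with a relevance score attached. The function names are hypothetical.

```python
def estimate_tokens(text: str) -> int:
    """Rough estimate: ceil(words * 10 / 7), i.e. about 0.7 words per token."""
    words = len(text.split())
    return (words * 10 + 6) // 7


def build_context(passages, token_budget):
    """Greedily pack the highest-scoring passages that fit within the budget.

    passages: list of (score, text) pairs.
    Returns (chosen_texts, tokens_used).
    """
    chosen, used = [], 0
    for score, text in sorted(passages, key=lambda p: p[0], reverse=True):
        cost = estimate_tokens(text)
        if used + cost > token_budget:
            continue  # skip passages that would overflow the budget
        chosen.append(text)
        used += cost
    return chosen, used


passages = [
    (0.9, "a b c d e f g"),                 # 7 words, about 10 tokens
    (0.5, "h i j k l m n o p q r s t u"),   # 14 words, about 20 tokens
    (0.8, "v w x"),                         # 3 words, about 5 tokens
]
context, used = build_context(passages, token_budget=16)
print(context, used)
```

A smaller budget lowers cost and latency per transaction; the risk is dropping the one passage the agent needed, which is why the budget is worth tuning per task.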

There are also many ways that intelligent agents can be augmented: it might be through Prompt Engineering, Machine-Managed Prompting such as DSPy, or frameworks such as LangChain or LlamaIndex. Each choice has pros and cons, which we will cover in future posts.

Generate

Generate is the point where we end up using foundational, fine-tuned or bespoke models to do the job at hand. In a chatbot, this is used to interact with the user. For some other intelligent agents, it might be to execute a task. The approach to generation, prediction, summarisation or any other discriminative or generative approach will be dictated by the task at hand. We must decide what the best model is for accuracy, latency and cost.

We always have the choice of running multiple models chained together with processing between them. We can have one model check the output of another model. We can have them collaborate with each other. We can use the power of a general model like GPT-4 and couple that with a fine-tuned bespoke model that really understands the Enterprise context and language. All of these are valid choices depending on the use case.
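The "one model checks another" pattern can be sketched as a short loop. To keep the example runnable without any model provider, the generator and checker below are plain Python stub functions; in a real agent each would wrap a model call. All names here are hypothetical.

```python
def generate_with_check(task, generator, checker, max_attempts=2):
    """Ask `generator` for a draft, let `checker` approve it or give feedback.

    generator(task, feedback) -> draft text
    checker(task, draft)      -> (approved: bool, feedback: str)
    """
    if max_attempts < 1:
        raise ValueError("max_attempts must be at least 1")
    feedback = ""
    draft = ""
    for attempt in range(1, max_attempts + 1):
        draft = generator(task, feedback)
        approved, feedback = checker(task, draft)
        if approved:
            return {"output": draft, "attempts": attempt, "approved": True}
    # Out of attempts: return the last draft, flagged as not approved.
    return {"output": draft, "attempts": max_attempts, "approved": False}


def stub_generator(task, feedback):
    """Stand-in for a generative model; adds a citation only when asked."""
    draft = f"Summary of: {task}"
    if "cite" in feedback:
        draft += " [source: policy-doc]"
    return draft


def stub_checker(task, draft):
    """Stand-in for a checking model; insists on a cited source."""
    if "[source:" in draft:
        return True, ""
    return False, "Please cite a source."


result = generate_with_check("leave policy", stub_generator, stub_checker)
print(result)
```

Each extra attempt is another model call, so `max_attempts` is a direct lever on the cost and latency of the chain.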

Evaluate

One of the toughest parts of any intelligent agent is to determine if the task you’ve just done is correct or not. Did you use the right inputs? Has the generation produced something that is coherent and appropriate for the end user?

If you’re about to give an answer back to a user, in some cases where accuracy is demanded, you have to be sure that you are giving them the right information. For many knowledge tasks, this requires corroborated information or some other way to triangulate whether the task is being done successfully. Our evaluation choices need to take this into consideration.

We consider and design appropriate benchmarks during the training and design process. But we also consider how to corroborate answers with previous experiences and known good answers. For instance, if we have a customer service support AI, we might examine previous customer interactions that were handled by people to see how well the AI aligns. In some ways, this is how people work. We rely on previous experiences and knowledge to know whether what we are going to say is good or not.
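Corroborating against known good answers can be sketched as scoring the agent on a small "golden set" of past questions and the answers people gave. The metric below, token-overlap F1, is just one simple choice assumed for illustration; the 0.6 pass threshold, the function names and the sample data are hypothetical.

```python
from collections import Counter


def token_f1(candidate: str, reference: str) -> float:
    """Token-overlap F1 between an agent answer and a known good answer."""
    cand = candidate.lower().split()
    ref = reference.lower().split()
    overlap = sum((Counter(cand) & Counter(ref)).values())
    if overlap == 0:
        return 0.0
    precision = overlap / len(cand)
    recall = overlap / len(ref)
    return 2 * precision * recall / (precision + recall)


def pass_rate(agent, golden_set, threshold=0.6):
    """Fraction of golden questions where the agent's answer clears the threshold."""
    if not golden_set:
        raise ValueError("golden_set must not be empty")
    scores = [token_f1(agent(question), answer) for question, answer in golden_set]
    return sum(score >= threshold for score in scores) / len(scores)


# Hypothetical past interactions handled by people: (question, good answer).
golden_set = [
    ("How long do refunds take?", "refunds take five days"),
    ("How do I contact support?", "contact support by email"),
]

# Stand-in for the agent under test.
canned = {
    "How long do refunds take?": "refunds take five days",
    "How do I contact support?": "we are closed",
}
rate = pass_rate(lambda question: canned[question], golden_set)
print(rate)
```

Word overlap is a blunt instrument: it rewards matching wording rather than matching meaning, so for high-stakes tasks it would likely be paired with human review or a stronger similarity measure.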

Levels of accuracy and performance

We have levels for each element of SAGE that allow us to examine how much we need to build to ensure accuracy. But accuracy and precision come at a price. The price is often speed and cost, so you don’t always want to use the most sophisticated models, the longest context lengths, or multiple calls to different models. We will explore the different levels in future posts.